Background:

I wanted to ensure content for a new feature (Well-Being Club) was findable, usable, and understandable.

Test:

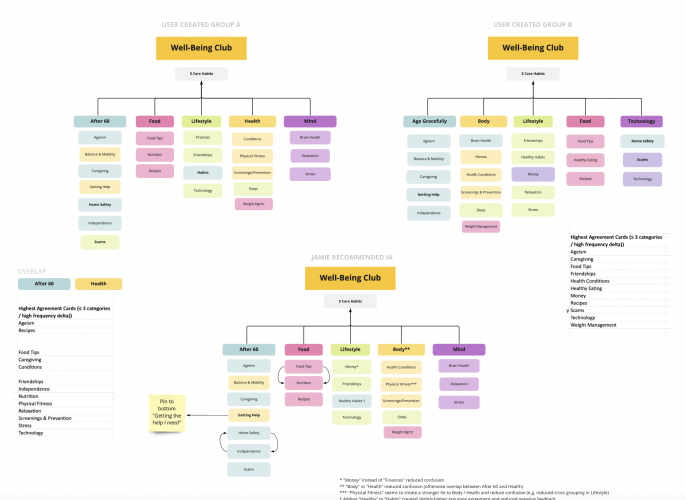

I started with a card sort test with two audience groups (A and B) to help determine initial category and label alignment. After analyzing the results, I created two new versions and ran a second closed sort test, which revealed key structural insights and strong label preferences. Based on those results, I put together a revised model and presented my recommendations to our clinical health team.

Results:

Testing helped me gain a better understanding of our audiences' mental models and gave me the data I needed to push back against the confusing terminology and categorization our clinical team had originally suggested. Armed with test results and well-informed recommendations, I made a successful case for restructuring and relabeling our content in the way that made the most sense to our members.